Sept. 11, 2001: “To honor those whose lives were lost, their families, and all who sacrifice that we may live in freedom. We will never forget.”

Reprinted with permission for the author only from the September 2011 ISSA Journal

Prerequisites

SIFT 2.1 if you’d like a forensics-focused virtual machine with Volatility ready to go

Python version 2.6 or higher on Windows, Linux, or Mac OS X

Some plugins require third-party libraries

Introduction

Two recent releases give cause for celebration and discussion in toolsmith. First, in July, Matthieu Suiche of MoonSols released DumpIt for general consumption, a “fusion of win32dd and win64dd in one executable.” Running DumpIt on the target system generates a copy of the physical memory in the current directory. That good news was followed by Ken Pryor’s post on the SANS Computer Forensics Blog (I’m a regular reader; you should be too) mentioning that Volatility 2.0 had been released in time for the Open Memory Forensics Workshop, and that SIFT 2.1 was also available. Coincidence? I think not; Volatility 2.0 is available on SIFT 2.1. Thus the perfect storm formed, creating the ideal opportunity to discuss the complete life cycle of memory acquisition and analysis for forensics and incident response. In May 2010, we discussed SIFT 2.0 and mentioned how useful Volatility is, but didn’t give it its due. Always time to make up for our shortcomings, right?

If you aren't already aware of Volatility, “the Volatility Framework is a completely open collection of tools, implemented in Python under the GPL, for the extraction of digital artifacts from volatile memory (RAM) samples.”

One thing I’ve always loved about writing toolsmith is meeting people (virtually or in person) who share the same passion for and dedication to our discipline. Such is the case with the Volatility community.

As always, I reached out to project leads/contributors and benefited from very personal feedback regarding Volatility. Mike Auty and Michael Hale Ligh (MHL) each offered valuable insight you may not glean from the impressive technical documentation available to Volatility users.

Regarding the Volatility roadmap, Mike Auty indicated that the team has an ambitious goal for their next release (which they want to release in 6 months, a big change from their last release). They're hoping to add Linux support (as written by Andrew Case), as well as 64-bit support for Windows (still being written), and a general tidy up for the code base without breaking the API.

MHL offered the following:

“At the Open Memory Forensics Workshop (OMFW) in late July, many of the developers sat on a panel and described what got them involved in the project. Some of us are experts in disk forensics, wanting to extend those skills to memory analysis. Some are experts in forensics for platforms other than Windows (such as Linux, Android, etc.) who were looking for a common platform to integrate code. I personally was looking for new tools that could help me understand the Windows kernel better and make my training course on rootkits more interesting to people already familiar with running live tools such as GMER, IceSword, Rootkit Unhooker, etc. I think the open source nature of the project is inviting to new-comers, and I often refer to the source code as a Python version of the Windows Internals book, since you can really learn a lot about Windows by just looking at how Volatility enumerates evidence.”

Man, does that say it all! Stay with this thinking and consider this additional nugget of Volatility majesty from MHL. In his blog post specific to using Volatility to detect Stuxnet, Stuxnet's Footprint in Memory with Volatility 2.0, he discusses Sysinternals tools side-by-side with artifacts identified with Volatility. MHL is dead-on right when he says this may be of “interest [to] your readers, especially those who have never heard of Volatility before, because it builds on something they do know - Sysinternals tools.”

This was an incredibly timely post for me as I read it right on the heels of hosting the venerable Mark Russinovich at the ISSA Puget Sound July chapter meeting where he presented Zero Day Malware Cleaning with the Sysinternals Tools, including live analysis of the infamous Stuxnet virus.

See how this all comes together so nicely?

Read Mark’s three posts on TechNet followed immediately by MHL’s post on his MNIN Security Blog, then explore Volatility for yourself; I’ll offer you some SpyEye analysis examples below.

NOTE: MHL was one of the authors of Malware Analyst's Cookbook and DVD: Tools and Techniques for Fighting Malicious Code; I’ll let the reviews speak for themselves (there are ten reviews on Amazon and all are 5 stars). I share Harlan’s take on the book and simply recommend that you buy it if this topic interests you.

Some final thoughts from AAron Walters, the principal developer and lead for Volatility:

“We have a hard working development team and it’s appreciated when people recognize the work that is being done. The goal was to build a modular and extendable framework that would allow researchers and practitioners come together and collaborate. As a result, shortening the amount of time it takes to get cutting edge research into the hands of practitioners. We also wanted to encourage and push the technical advancement of the digital forensics field which had frequently lagged behind the offensive community. It's amazing to see how far the project has come since I dropped the initial public release more than 4 years ago. With the great community now supporting the project, there are lot more exciting enhancements in the pipe line...”

DumpIt

Before you can conduct victim system analysis you need to capture memory. Some form of dd, including MoonSols win32dd and win64dd, has long been the de facto standard, but the recently released MoonSols DumpIt makes the process incredibly simple.

On a victim system (local or via psexec), running DumpIt is as easy as executing DumpIt.exe from the command line or Windows Explorer. The raw memory dump is written to the same directory you’re running DumpIt from; answer yes or no when asked if you wish to continue and that’s all there is to it. A .raw memory image named for the hostname, date, and UTC time will result. DumpIt is ideal for your incident response jump kit; deploy the executable on a USB key or your preferred response media.

Figure 1: Run DumpIt

Painless and simple, yes? I ran DumpIt on a Windows XP SP3 virtual machine that had been freshly compromised with SpyEye (md5: 00B77D6087F00620508303ACD3FD846A), an exercise that resulted in my being swiftly shunted by my DSL provider. Their consumer protection program was kind enough to let me know that “malicious traffic was originating from my account.” Duh, thanks for that, I didn’t know. ;-)

Clearly, it’s time to VPN that traffic out through a cloud node, but I digress.

SpyEye has been in the news again lately with USA Today Tech describing a probable surge in SpyEye attacks due to increased availability and reduced cost from what used to be as much as $10,000 for all the bells and whistles, down to as little as $95 for the latest version. Sounds like a good time for a little SpyEye analysis, yes?

I copied the DumpIt-spawned .raw image from the pwned VM to my shiny new SIFT 2.1 VM and got to work.

Volatility 2.0

So much excellent documentation exists for Volatility; on the Wiki I suggest you immediately read the FAQ, Basic Usage, Command Reference, and Features By Plugin.

As discussed in May 2010’s toolsmith on SIFT 2.0, you can make use of Volatility via PTK, but given that we’ve discussed that methodology already and the fact that there are constraints imposed by the UI, we’re going to drive Volatility from the command line for this effort. My memory image was named HIOMALVM02-20110811-165458.raw by DumpIt; I shortened it to HIOMALVM02.raw for ease of documentation and word space.
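If you keep the original DumpIt name rather than shortening it, the hostname and capture time can be recovered from the filename itself. The following is a minimal sketch, assuming the hostname-date-time layout seen in my image name holds generally; `parse_dumpit_name` is a hypothetical helper, not part of DumpIt or Volatility, and it is written for Python 3 rather than the Python 2 that Volatility 2.0 runs on.

```python
from datetime import datetime, timezone
from pathlib import Path


def parse_dumpit_name(filename):
    """Split a DumpIt-style image name into (hostname, UTC capture time).

    Assumes the layout HOST-YYYYMMDD-HHMMSS.raw, as in the article's example.
    """
    stem = Path(filename).stem  # drop the .raw extension
    # Split from the right so a hostname containing hyphens stays intact.
    host, date_part, time_part = stem.rsplit("-", 2)
    captured = datetime.strptime(date_part + time_part, "%Y%m%d%H%M%S")
    # DumpIt names the image with UTC time, so mark the timestamp as UTC.
    return host, captured.replace(tzinfo=timezone.utc)


host, captured = parse_dumpit_name("HIOMALVM02-20110811-165458.raw")
print(host, captured.isoformat())
```

Recording the parsed values in your case notes before renaming the image keeps the acquisition time from getting lost.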

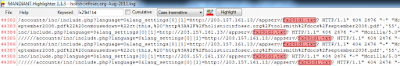

I executed vol.py imageinfo -f HIOMALVM02.raw to confirm just that, image information. This plugin provided PAE (physical address extension) status as well as hex offsets for DTB (Directory Table Base), KDBG (short for _KDDEBUGGER_DATA64), KPCR (Kernel Processor Control Region), time stamps and processor counts.

Figure 2: imageinfo plugin results

Windows XP SP3, check.
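With the profile confirmed, every later plugin run in this walkthrough takes the same shape: vol.py, the profile, the plugin name, the image, and any plugin options. A minimal sketch of a helper that assembles those command lines follows; `vol_command` is a hypothetical name of my own, it only uses the options that appear in this article, and it assumes options may follow the plugin name, as they do in the commands shown here.

```python
def vol_command(plugin, image, profile=None, extra=()):
    """Build an argument list for a vol.py run (hypothetical helper).

    Only the options used in this article are assumed: --profile and -f,
    plus whatever plugin-specific options the caller passes in `extra`.
    """
    argv = ["vol.py"]
    if profile:
        argv.append("--profile=" + profile)
    argv += [plugin, "-f", image]
    argv += list(extra)
    return argv


# The plugins run in this article, expressed as data.
runs = [
    vol_command("connscan", "HIOMALVM02.raw", "WinXPSP3x86"),
    vol_command("pslist", "HIOMALVM02.raw", "WinXPSP3x86", ["-P"]),
    vol_command("pstree", "HIOMALVM02.raw", "WinXPSP3x86"),
]
for argv in runs:
    print(" ".join(argv))
```

Each list could be handed to `subprocess.run` on a system where vol.py is on the PATH (as on SIFT), which makes it easy to capture every plugin's output to a file for your case notes.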

Runtime analysis of my SpyEye sample gave me a few queryable entities to throw at Volatility for good measure, but we’ll operate here as if the only information we have is a suspicion of system compromise.

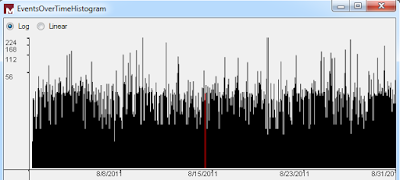

It’s always good to see what network connections may have been made.

vol.py --profile=WinXPSP3x86 connscan -f HIOMALVM02.raw

The connscan plugin scans physical memory for connection objects.

Results included:

Interesting: both IPs are in Germany, and my VMs don’t make known good connections to Germany, so let’s build from here.

The PID associated with the second connection to 188.40.138.148 over port 80 is 1512.

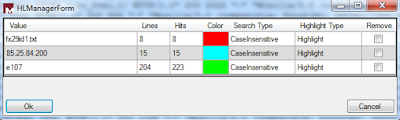

The pslist plugin prints active processes by walking the PsActiveProcessHead linked list.
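To make "walking a linked list" concrete, here is a toy model in Python 3, not Volatility's actual code. It assumes a circular doubly linked list with forward and backward links, which is how Windows chains its active process entries off PsActiveProcessHead; the `Entry` class is hypothetical, and the sample entries use the two PIDs from this analysis, with the explorer.exe name for PID 1512 inferred from the findings later in the article.

```python
class Entry:
    """Toy stand-in for a process entry with forward/back links."""

    def __init__(self, name, pid):
        self.name = name
        self.pid = pid
        self.flink = self  # forward link; an empty list points at itself
        self.blink = self  # backward link


def insert_tail(head, entry):
    """Link a new entry in just before the list head (i.e. at the tail)."""
    last = head.blink
    last.flink = entry
    entry.blink = last
    entry.flink = head
    head.blink = entry


def walk(head):
    """Follow forward links from the head until we arrive back at it."""
    seen = []
    node = head.flink
    while node is not head:
        seen.append((node.pid, node.name))
        node = node.flink
    return seen


head = Entry("<list head>", None)
for name, pid in [("System", 4), ("explorer.exe", 1512), ("cleansweep.exe", 3328)]:
    insert_tail(head, Entry(name, pid))

print(walk(head))
```

The point to take away is that a list walker only sees entries that are still linked in, which is why a process lingering in the list with no threads, as we'll see shortly, is worth a second look.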

vol.py --profile=WinXPSP3x86 pslist -P -f HIOMALVM02.raw

Use -P to acquire the physical offset for a process rather than the virtual offset, which is the default.

Results included a number of PPIDs (parent process IDs) that matched the 1512 PID from connscan:

I highlighted the process that jumped out at me given the anomalous time stamp, a 0 thread count and no handles.

Let’s check for additional references to cleansweep.

The pstree plugin prints the process list as a tree so you can visualize the parent/child relationships.

vol.py --profile=WinXPSP3x86 pstree -f HIOMALVM02.raw

Results included the PPID of 1512 and the PID for cleansweep.

Ah, the victim most likely downloaded cleansweep.exe and executed it via Windows Explorer.
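The parent/child view is easy to reproduce from any list of (PID, PPID, name) rows. This is a minimal Python 3 sketch of the idea, not the pstree plugin's implementation; `build_tree` and `render` are hypothetical helpers, PIDs 1512 and 3328 come from this analysis, and the parent PID of 1468 for explorer.exe is made up for illustration.

```python
from collections import defaultdict


def build_tree(rows):
    """Group (pid, ppid, name) rows by parent and find the root entries."""
    children = defaultdict(list)
    pids = {pid for pid, _, _ in rows}
    for pid, ppid, name in rows:
        children[ppid].append((pid, name))
    # A root is any process whose parent is not present in the listing.
    roots = [(pid, name) for pid, ppid, name in rows if ppid not in pids]
    return roots, children


def render(nodes, children, depth=0):
    """Return indented lines, one per process, children under parents."""
    lines = []
    for pid, name in nodes:
        lines.append("%s%s (pid %d)" % (". " * depth, name, pid))
        lines.extend(render(children.get(pid, []), children, depth + 1))
    return lines


rows = [
    (1512, 1468, "explorer.exe"),    # PPID 1468 is a made-up value
    (3328, 1512, "cleansweep.exe"),  # PID and PPID as seen in this analysis
]
roots, children = build_tree(rows)
print("\n".join(render(roots, children)))
```

Treating "parent not in the listing" as a root mirrors what you often see in real images, where a parent has already exited by the time memory is captured.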

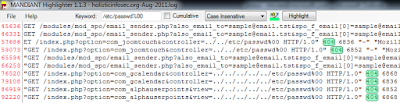

But can we extract actual binaries for analysis via the likes of Virus Total? Of course.

This is where the malware plugins are very helpful. I already know I’m not going to have much luck exploring PID 3328 as it has no threads or open handles. MHL points out that a process such as cleansweep.exe typically can't remain active with 0 threads as a process is simply a container for threads, and it will terminate when the final thread exits. Cleansweep.exe is still in the process list probably because another component of the malware (likely the one that started cleansweep.exe in the first place) never called CloseHandle to properly "clean up." That said, the PPID of 1512 has clearly spawned PID 3328, so let’s explore the PPID with the malfind plugin, which extracts injected DLLs, injected code, unpacker stubs, and API hook trampolines. The malware (malfind) plugins don't come packaged with Volatility, but are in fact a part of the above-mentioned Malware Analyst's Cookbook; the latest version can also be downloaded.

vol.py --profile=WinXPSP3x86 -f HIOMALVM02.raw malfind -p 1512 -D output/ yielded PE32 gold as seen in Figure 3.

Figure 3: malfind plugin results

Malfind dropped each of the suspicious PE files it discovered to my output directory as .dmp files. I submitted each to Virus Total, and bingo, all three were malicious and identified as SpyEye variants as seen in Figure 4.

Figure 4: PE results from Virus Total
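Before uploading dumped files anywhere, it is worth hashing them, both for your case notes and because Virus Total can be searched by hash. A minimal Python 3 sketch follows, assuming the dumps sit in the output/ directory passed to malfind with a .dmp extension; `hash_dumps` is a hypothetical helper of my own, not something shipped with Volatility or the malware plugins.

```python
import hashlib
from pathlib import Path


def hash_dumps(directory, pattern="*.dmp"):
    """Return {filename: {"md5": ..., "sha256": ...}} for matching files."""
    base = Path(directory)
    results = {}
    if not base.is_dir():
        return results  # nothing to hash if the directory is missing
    for path in sorted(base.glob(pattern)):
        data = path.read_bytes()
        results[path.name] = {
            "md5": hashlib.md5(data).hexdigest(),
            "sha256": hashlib.sha256(data).hexdigest(),
        }
    return results


if __name__ == "__main__":
    # "output" is the directory given to malfind via -D in this article.
    for name, digests in hash_dumps("output").items():
        print(name, digests["md5"], digests["sha256"])
```

Keep in mind that a region dumped from memory will generally not hash to the same value as the original file on disk, so treat these hashes as identifiers for the dumps themselves.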

In essence, we’ve done for ourselves via memory analysis what online services such as Threat Expert will do via runtime analysis. Compare this discussion to the Threat Expert results for the SpyEye sample I used.

There is so much more I could have discussed here, but space is limited and we’ve pinned the VU meter in the red, so go read the Malware Cookbook as well as all the online Volatility resources, and push Volatility to the boundaries of your skill set and imagination. In my case the only limiting factors were constraints on my time and my lack of knowledge. There are few limits imposed on you by Volatility; 64-bit and Linux analysis support are pending. Get to it!

In Conclusion

I’ve said it before and I’ll say it again. I love Volatility. Volatility 2.0 makes me squeal with delight and clap my hands like a little kid at the state fair. Oh the indignity of it all, a grown man cackling and clapping when he finds the resident evil via a quick memory image and the glorious volatile memory analysis framework that is Volatility.

An earlier comment from MHL bears repeating here. Volatility source code can be likened to “a Python version of the Windows Internals book, since you can really learn a lot about Windows by just looking at how Volatility enumerates evidence.” Yeah, what he said.

Do you really need any more motivation to explore and use Volatility for yourself?

There’s a great list of samples to grab and play with. Do so and enjoy! As it has for me, this process will likely become inherent to your IR and forensic efforts, perhaps even surpassing other tactics and methods as your preferred, go-to approach.

Ping me via email if you have questions (russ at holisticinfosec dot org).

Cheers…until next month.

Acknowledgements

Mike Auty & Michael Hale Ligh of the Volatility project.

AAron Walters – Volatility lead